Generative AI Video Timeline by Justine Moore

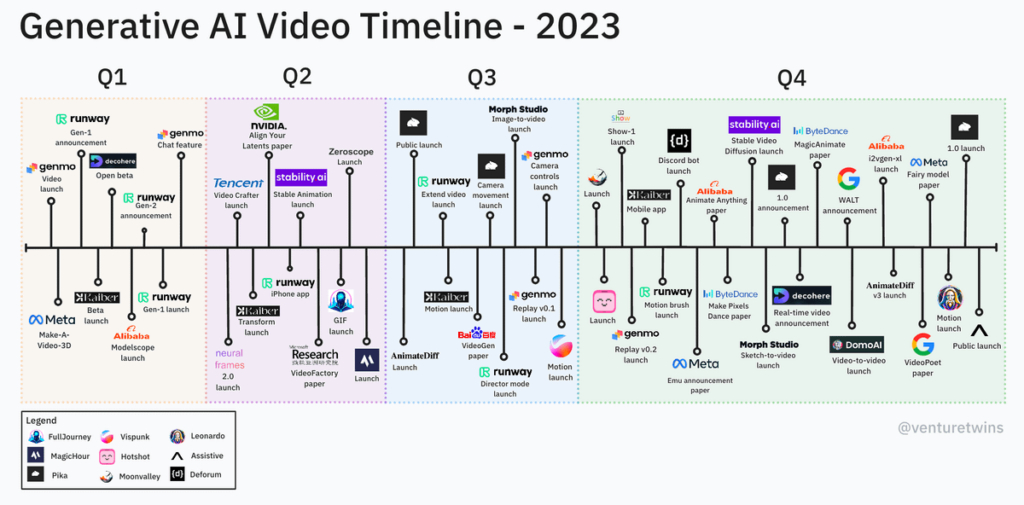

Shortly before the shift from 2023 to 2024, Justine Moore, a partner at a16z, published an insightful thread 🧵 that explores the evolution of generative AI video and shares her take on its state and near-term future.

So, what’s next for AI video?

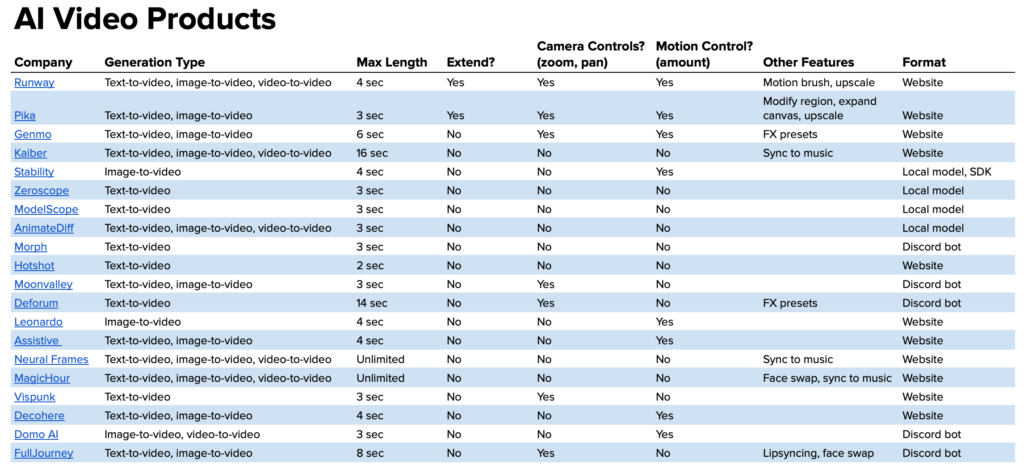

First of all, it’s a runaway category, as pictured in Moore’s timeline. She is already tracking 21 promising players that have emerged in just one year, and that count leaves out the array of up-and-coming startups not on her list.

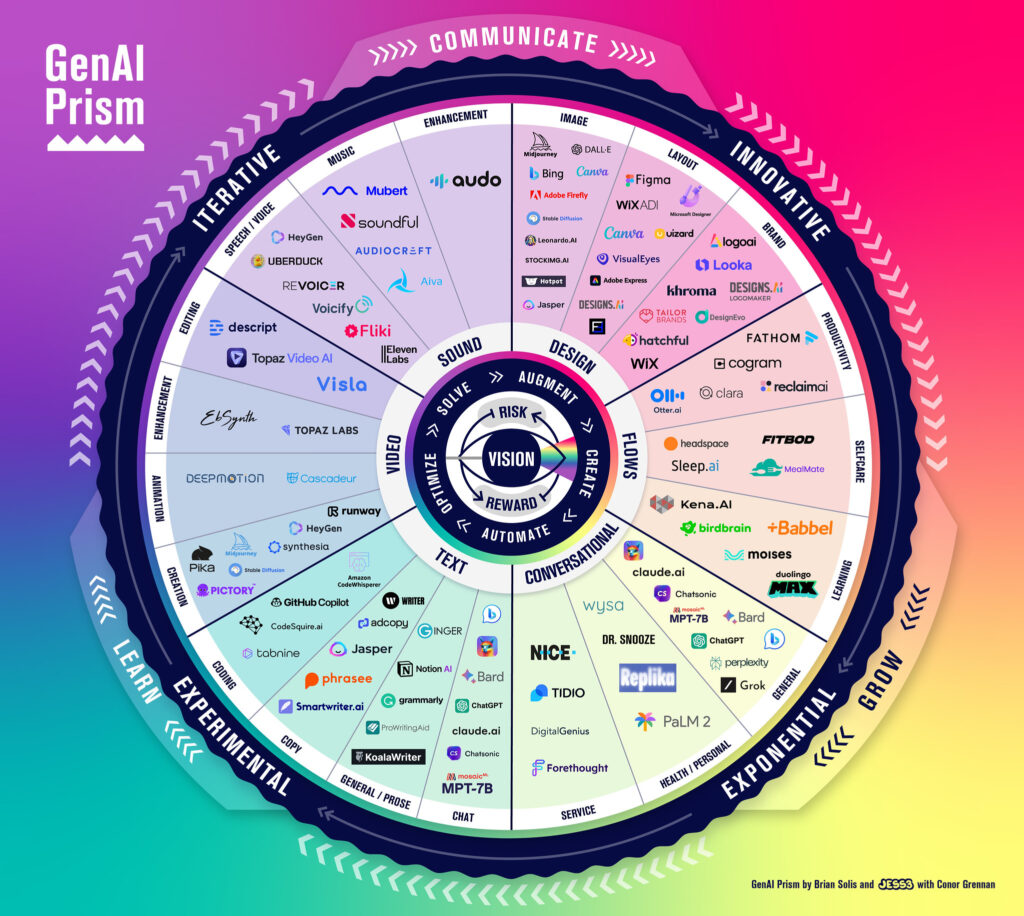

In fact, her work is inspiring how we prioritize the category in the next rev of our GenAI Prism (JESS3 + Conor Grennan).

What do these 21 companies have in common? According to Moore, most are focused on the following:

🔗 Temporal coherence – do characters/scenes stay consistent between frames?

🕹️ Control – can you control what happens + how the “camera” moves?

⏳ Length – can you make clips beyond a couple of seconds?

Moore also predicts integrated workflows on the horizon…

“Making quality video is a LOT of work, from scripting/storyboarding to editing, upscaling, sound…,” she observed. “Most products focus on pure generation – you stitch together outputs elsewhere.”

Her point is that creators need an end-to-end workspace!

Here’s one example of how many tools creators have to piece together today.

Behind the scenes on the creative process to get to one great shot 🎬

MidJourney → Magnific AI → RunwayML pic.twitter.com/kZycz4POgZ

— Ammaar Reshi (@ammaar) December 3, 2023

Her questions for 2024 are ones we should follow too…

1) Will Meta and Google finally release their models?

2) What’s the role of open source? Many products are built on SD, but true foundation models tend to be closed.

3) Who will crack the data challenge? Quality, labeled video data is sparse.

Moore put together a public Google Doc that lists, with links, the 21 AI video companies she’s tracking.

That’s it for this round! Stay tuned for the next edition of Generative Insights in AI.